Businesses, developers, and researchers constantly look for ways to put large amounts of data to use. Data is essential for gaining insights, making informed decisions, and creating innovative applications. As the volume of data on the Internet grows, data collection tools have become more advanced than ever, and individuals and companies continue to discover new ways to use this information. Scraping APIs are one of the most effective ways to collect data from the web.

A scraping API combines an API with web scraping to make extracting and analyzing data easier. This article explains what scraping APIs are, what they do, their benefits, and how to use them effectively for your business.

What Is Web Scraping?

Web scraping means automatically collecting information from websites across the Internet. For example, a website that lists products from many different stores may use web scraping to gather those listings for you. This helps people and companies compare prices and make informed choices.

Web scraping can be challenging because a website may block your IP address if it sends too many requests, since that traffic can look like spam. Proxy servers help with this by routing your requests through different IP addresses, so you can keep collecting information without getting blocked.
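To illustrate the proxy idea, here is a minimal sketch that rotates requests across a pool of proxies using only Python's standard library. The proxy addresses and the User-Agent string are hypothetical placeholders, and simple round-robin rotation is just one possible strategy; commercial proxy services often handle rotation for you.

```python
import itertools
import urllib.request

# Hypothetical proxy addresses; replace them with proxies you are authorized to use.
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
    "http://proxy3.example.com:8080",
]


class ProxyRotator:
    """Hands out URL openers that route traffic through proxies in round-robin order."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._cycle = itertools.cycle(proxies)

    def next_opener(self):
        proxy = next(self._cycle)
        # ProxyHandler maps each URL scheme to the proxy that should carry it.
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        opener = urllib.request.build_opener(handler)
        opener.addheaders = [("User-Agent", "example-scraper/0.1")]  # hypothetical bot name
        return proxy, opener


rotator = ProxyRotator(PROXIES)
# Each call uses the next proxy in the pool, for example:
# proxy, opener = rotator.next_opener()
# html = opener.open("https://example.com", timeout=10).read()
```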

What Is an API?

API stands for Application Programming Interface. It acts as a bridge between your device and the information it can access. Think of it as a virtual messenger: when you want to find out about travel destinations, you ask the messenger to get that information. The messenger then asks the travel app for the best places to visit at reasonable prices and brings the answer back to you.

APIs underpin most of your online activities. Without them, applications could not connect to one another, and much of the information on the Internet would exist with no practical way to access it.

What Is a Scraping API?

A scraping API, also called a web scraping API, is a tool that lets users extract data from websites programmatically. A scraping API collects structured information from specific websites, databases, or programs. It makes it easier to study the market, understand what the competition is doing, and combine different data sets. Businesses can use a scraping API to gather information from external sources without doing it all by hand, which helps keep the collected data accurate and consistent.

Octoparse is one example of a useful tool for getting information from the Internet. It can gather various kinds of data from websites, such as product details, prices, and customer reviews. You describe what you're looking for, and Octoparse collects the matching information. This helps you study the market, see what the competition is up to, and make data-driven decisions.

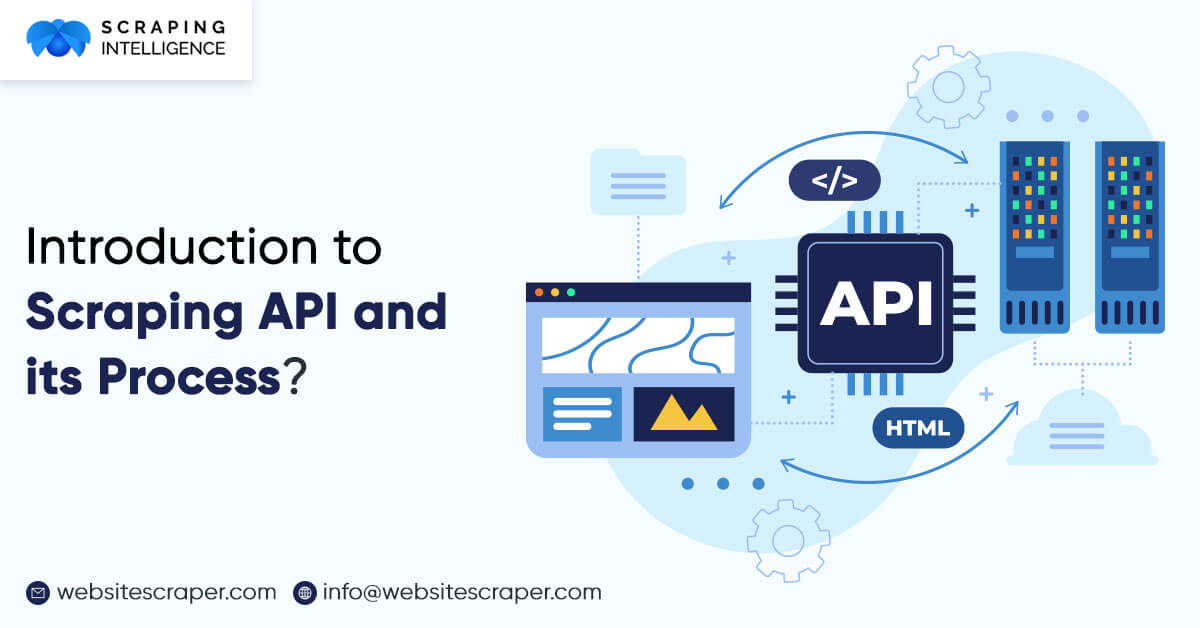

How Does a Scraping API Work?

Here is a step-by-step breakdown of how a scraping API works:

Request Initiation

The process begins with a user or application sending a request to the scraping API that specifies the target website and the data to be extracted. Customized requests may include parameters that identify specific elements or data points on the web page.
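As an illustration, a request to a scraping API is often just an HTTP call whose query parameters name the target page and the data wanted. The endpoint `https://api.example-scraper.com/v1/scrape` and the parameters `api_key`, `url`, `selector`, `render_js`, and `format` below are hypothetical; real providers use their own names, so check their documentation.

```python
from urllib.parse import urlencode

# Hypothetical scraping API endpoint; not a real service.
API_ENDPOINT = "https://api.example-scraper.com/v1/scrape"


def build_scrape_request(api_key, target_url, selector=None, render_js=False, fmt="json"):
    """Return the full request URL for a (hypothetical) scraping API call."""
    params = {
        "api_key": api_key,
        "url": target_url,  # page to scrape
        "render_js": "true" if render_js else "false",  # ask for JavaScript rendering
        "format": fmt,  # desired output format
    }
    if selector:
        params["selector"] = selector  # optional CSS selector for the data points
    # urlencode percent-encodes values, so the target URL travels safely as one parameter.
    return f"{API_ENDPOINT}?{urlencode(params)}"


request_url = build_scrape_request(
    "YOUR_API_KEY", "https://shop.example.com/item/42", selector=".price"
)
```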

Fetching the Web Page

The scraping API sends an HTTP request to the target website, just as a web browser fetches a page when a user visits a URL. The API retrieves the page's HTML content, including the visible text, references to images, and the underlying code.
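A minimal sketch of this fetch step using Python's standard `urllib.request` follows. The User-Agent string, its info URL, and the 10-second timeout are illustrative assumptions, not values any particular scraping API uses; production services typically layer proxies, retries, and JavaScript rendering on top of a step like this.

```python
import urllib.request

# Illustrative default headers; identify your client honestly.
DEFAULT_HEADERS = {"User-Agent": "example-scraper/0.1 (+https://example.com/bot)"}


def build_request(url, headers=None):
    """Create a GET request with the default headers plus any extra headers."""
    merged = {**DEFAULT_HEADERS, **(headers or {})}
    return urllib.request.Request(url, headers=merged, method="GET")


def fetch_html(url, timeout=10.0):
    """Download a page and decode it using the charset declared by the server."""
    with urllib.request.urlopen(build_request(url), timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


# html = fetch_html("https://example.com")
```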

Parsing the HTML

After obtaining the HTML content, the scraping API parses it, typically into a document tree, and locates the required data, often using CSS selectors or XPath expressions.
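To make parsing concrete, the sketch below uses Python's built-in `html.parser` to find every element carrying a given CSS class and collect its text. The class name `price` and the sample markup are hypothetical, and the parser is deliberately simplified (it assumes non-void tags are properly closed); real scrapers often use libraries such as Beautiful Soup or lxml with CSS selectors or XPath instead.

```python
from html.parser import HTMLParser

# Void elements never get a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ClassTextParser(HTMLParser):
    """Collects the text inside every element that carries a given CSS class."""

    def __init__(self, target_class):
        super().__init__()
        self.target_class = target_class
        self.depth = 0  # > 0 while inside a matching element
        self.current = []
        self.results = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.depth:
            self.depth += 1  # nested element inside a match
        elif self.target_class in classes:
            self.depth = 1
            self.current = []

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self.depth:
            return
        self.depth -= 1
        if self.depth == 0:
            self.results.append("".join(self.current).strip())

    def handle_data(self, data):
        if self.depth:
            self.current.append(data)


def extract_by_class(html, target_class):
    parser = ClassTextParser(target_class)
    parser.feed(html)
    parser.close()
    return parser.results


sample = ('<div class="item"><span class="price sale">$19.99</span><br>'
          '<span class="price">$5.00</span></div>')
prices = extract_by_class(sample, "price")
```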

Data Extraction

The API then extracts the identified data from the HTML. Depending on the initial request, this data may include text, links, images, and other elements.
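Extracting links and images can be sketched in the same way. The parser below records `href` attributes on `<a>` tags and `src` attributes on `<img>` tags, resolving them against the page URL with `urljoin` so relative paths become absolute. The sample page and base URL are made up for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkImageExtractor(HTMLParser):
    """Collects absolute URLs of links (<a href>) and images (<img src>)."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []
        self.images = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "a" and attributes.get("href"):
            self.links.append(urljoin(self.base_url, attributes["href"]))
        elif tag == "img" and attributes.get("src"):
            self.images.append(urljoin(self.base_url, attributes["src"]))


def extract_links_and_images(html, base_url):
    parser = LinkImageExtractor(base_url)
    parser.feed(html)
    parser.close()
    return {"links": parser.links, "images": parser.images}


page = ('<a href="/item/1">Item</a><img src="img/1.png">'
        '<a href="https://other.example/">Elsewhere</a>')
data = extract_links_and_images(page, "https://shop.example.com/catalog/")
```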

Handling Dynamic Content

Many websites today use JavaScript to load content dynamically. Scraping APIs can handle this by simulating a browser environment with tools such as headless browsers. These tools execute the page's JavaScript so that all dynamically loaded content is fully rendered before it is extracted.

Data Cleaning and Transformation

The extracted data may need to be cleaned and transformed before it can be used. This means removing unwanted characters, standardizing dates and text, and converting the data into structured formats like JSON, CSV, or XML.
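Here is a small sketch of typical cleaning steps in Python: collapsing whitespace, converting a price string to a number, and normalizing a date to ISO 8601. The input formats (a dollar price with thousands separators and dates like `March 5, 2024`) are assumptions chosen for the example; real data will need its own rules.

```python
import re
from datetime import datetime
from decimal import Decimal


def clean_text(value):
    """Collapse runs of whitespace into single spaces.

    In Python 3 str patterns, \\s also matches Unicode whitespace such as
    non-breaking spaces, which often appear in scraped HTML text.
    """
    return re.sub(r"\s+", " ", value).strip()


def parse_price(value):
    """Turn a string like ' $1,299.00 ' into Decimal('1299.00'); None if no number is found."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", value)
    return Decimal(match.group().replace(",", "")) if match else None


def normalize_date(value, fmt="%B %d, %Y"):
    """Convert a date such as 'March 5, 2024' to ISO format '2024-03-05'."""
    return datetime.strptime(clean_text(value), fmt).date().isoformat()


def clean_record(raw):
    return {
        "title": clean_text(raw["title"]),
        "price": parse_price(raw["price"]),
        "date": normalize_date(raw["date"]),
    }


record = clean_record(
    {"title": "  Wireless\n  Mouse ", "price": "$1,299.00", "date": "March 5, 2024"}
)
```

`Decimal` is used instead of `float` so prices keep exact cents.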

Delivering the Data

The cleaned and formatted data is then sent back to the client in the requested format. The client can use it for purposes such as analysis, reporting, or integration with other systems.
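As a sketch of the delivery step, the function below serializes cleaned records into JSON or CSV, two of the formats clients commonly request. The `fmt` parameter and the sample rows are assumptions for illustration; scraping APIs expose their output options in their own ways.

```python
import csv
import io
import json


def serialize(records, fmt="json"):
    """Serialize a list of flat dicts as JSON or CSV text."""
    if fmt == "json":
        return json.dumps(records, ensure_ascii=False, indent=2)
    if fmt == "csv":
        if not records:
            return ""
        buffer = io.StringIO()
        # Column order follows the keys of the first record.
        writer = csv.DictWriter(buffer, fieldnames=list(records[0].keys()))
        writer.writeheader()
        writer.writerows(records)
        return buffer.getvalue()
    raise ValueError(f"unsupported format: {fmt!r}")


rows = [
    {"title": "Wireless Mouse", "price": "19.99"},
    {"title": "USB-C Cable", "price": "7.50"},
]
```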

Error Handling and Retries

Scraping APIs typically include built-in handling for problems such as network errors, timeouts, or changes in the target website's structure. They can automatically retry failed requests and adjust parsing rules so that data is still extracted successfully.
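The retry behavior can be sketched as a generic helper with exponential backoff and jitter. The attempt count, delays, and the sets of retryable exceptions and HTTP status codes are illustrative assumptions, and the `sleep` argument exists only so the helper can be exercised without actually waiting.

```python
import random
import time
import urllib.error

# Assumed retry policy; adjust it to the API you are calling.
RETRYABLE_ERRORS = (urllib.error.URLError, TimeoutError, ConnectionError)
RETRYABLE_STATUS = {429, 500, 502, 503, 504}


def with_retries(operation, max_attempts=4, base_delay=1.0, max_delay=30.0, sleep=time.sleep):
    """Call operation() until it succeeds, backing off exponentially between failures."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except RETRYABLE_ERRORS as exc:
            if isinstance(exc, urllib.error.HTTPError) and exc.code not in RETRYABLE_STATUS:
                raise  # e.g. 404 Not Found: retrying will not help
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            # "Full jitter": wait a random time up to the backoff ceiling so
            # that many clients do not retry in lockstep.
            sleep(random.uniform(0, delay))
```

To use it, wrap any network call in a zero-argument function (for example, a `lambda`) and pass it as `operation`.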

What Are the Benefits of Scraping APIs?

Using web scraping APIs makes it easier to extract information from the Internet. Here are the main benefits:

- Time Efficiency: Scraping APIs help you get information from websites faster. They automate the process and save a lot of time compared with collecting data manually.

- Accuracy: APIs return organized, consistent data, which lowers the chance of mistakes.

- Scalability: APIs make it easy to manage information from many sources and to collect more data when necessary.

- Resource Optimization: Offloading data collection to the API reduces the load on the user's device, and efficient requests reduce pressure on the website's server.

- Real-time Data Acquisition: APIs can provide up-to-date information, which is vital for businesses that rely on current data for decision-making.

- Dynamic Data Extraction: Scraping APIs can adjust to website changes, so they can still get new data even when the websites are updated.

- Reliable Execution: Good error handling in APIs helps ensure that data is collected correctly, which reduces the need for manual checks.

- High-Quality Information: APIs provide accurate and trustworthy information, which helps keep the quality of the collected data high.

- Streamlined Data Accessibility: You can customize requests to retrieve exactly the information you need, making it easier to use.

- Integration Capability: APIs work well with other tools and platforms, making it easy to combine data and add it to your existing activities.

- Cost-Effectiveness: Using APIs for data scraping is often cheaper than collecting data manually or building custom scraping tools from scratch.

- Comprehensive Data Retrieval: APIs can collect large volumes of information, which supports detailed analysis and a big-picture view.

- Adherence to Protocols: Well-designed web scraping APIs are built to respect websites' rules and keep user information private. This helps you stay within the law and act fairly toward site owners and users.

- Support Availability: Many API providers offer documentation and support to help users solve problems that arise when collecting data.

- Continuous Improvement: Providers keep improving their APIs to deliver data faster and keep up with new technology and changing needs.
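One concrete way to follow a website's rules is to honor its robots.txt file. Python's standard `urllib.robotparser` module can evaluate those rules; the sketch below parses a sample robots.txt inline (invented for the example) instead of downloading one, and the user-agent name is hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Sample robots.txt content, invented for illustration.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""

USER_AGENT = "example-scraper"  # hypothetical bot name


def load_rules(robots_txt):
    """Build a RobotFileParser from robots.txt text already in memory."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = load_rules(SAMPLE_ROBOTS_TXT)
# For a live site you would call rules.set_url("https://site/robots.txt") and rules.read().
# Before each request: rules.can_fetch(USER_AGENT, url)
# Suggested pause between requests, if declared: rules.crawl_delay(USER_AGENT)
```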

What Are the Disadvantages of Scraping APIs?

When considering the use of scraping tools to gather data from websites, it's essential to be mindful of the following aspects:

- Before utilizing scraping tools, it is vital to ensure they align with the website's terms and local regulations to avoid legal implications.

- When using scraping tools, it's essential to be mindful of the potential impact on the website's servers to maintain a positive user experience.

- The data obtained may need validation for accuracy and timeliness, and you should be prepared for potential changes in how accessible that data is.

- In the event of changes or disruptions to the external APIs, contingency plans must be in place to mitigate any impact on your application's functionality.

- Regular maintenance and monitoring are essential due to potential changes in API endpoints, authentication mechanisms, or data structures, which may require adjustments to your scraping processes.

- Adhering to rate limits set by APIs is crucial to ensuring ongoing access to data and avoiding potential disruption.

- When dealing with sensitive or personal data, ensure compliance with data privacy regulations and handle the information responsibly.

- Technical challenges such as pagination, authentication, error handling, and data interpretation take effort to overcome, although working through them strengthens technical expertise.

- Factoring in resource requirements, such as server bandwidth, storage, and processing power, is essential for a sustainable data-gathering operation.

- Practicing ethical and responsible use of scraping tools is imperative for contributing positively to the digital ecosystem.
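Two items on this list, rate limits and pagination, often meet in the same loop. The sketch below walks numbered pages while enforcing a minimum interval between requests. `fetch_page` is a hypothetical callable you would supply (for example, one that calls an API with a page number); stopping at the first empty page is an assumption that varies by API, and the clock and sleep functions are injectable so the logic can be checked without real delays.

```python
import time


class Throttle:
    """Enforces a minimum interval (in seconds) between consecutive calls to wait()."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self.clock = clock
        self.sleep = sleep
        self._last = None

    def wait(self):
        """Sleep just long enough to keep calls at least min_interval apart."""
        now = self.clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self.sleep(remaining)
                now = self.clock()
        self._last = now


def fetch_all_pages(fetch_page, throttle, max_pages=100):
    """Yield items from pages 1, 2, ... until a page comes back empty or max_pages is hit."""
    for page in range(1, max_pages + 1):
        throttle.wait()  # respect the rate limit before every request
        items = fetch_page(page)
        if not items:
            break
        yield from items
```

Capping `max_pages` keeps a misbehaving API from turning the loop into an endless crawl.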

API Scraping vs. Independent Web Scraping: Which Method is Superior?

When deciding between using APIs and doing web scraping on your own, the choice mostly depends on what you need, what you want to achieve, and what resources you have. Using APIs means you use specific access points provided by the website to get data in a structured format, like JSON or XML. This method is often more reliable and faster because APIs are made for sharing data, so they respond quickly and are less likely to stop working if the website changes. APIs also usually come with documentation that makes them easier to use.

On the other hand, independent web scraping means collecting data directly from a website's HTML. It can be helpful when an API is unavailable or doesn't provide the needed data. Web scraping gives you more flexibility, letting you collect any data that is visible on a webpage. However, it also has some challenges: web pages change often, which can break your scraper; it requires more complex parsing to interpret the data; and it may raise legal or ethical problems, especially if it violates the website's terms.
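The difference is easy to see side by side. In the sketch below, the same product appears once as a JSON API response and once as HTML; both payloads are invented for the example. Reading the JSON takes a single `json.loads` call, while the HTML route depends on markup details (here the `price` class) that can change whenever the site is redesigned.

```python
import json
import re

# Invented sample payloads representing the same product.
API_RESPONSE = '{"product": {"name": "Wireless Mouse", "price": 19.99}}'
HTML_PAGE = ('<div class="product"><h2>Wireless Mouse</h2>'
             '<span class="price">$19.99</span></div>')


def price_from_api(body):
    # Structured data: field names are part of the API's documented contract.
    return json.loads(body)["product"]["price"]


def price_from_html(html):
    # Unstructured markup: this pattern breaks if the class name or layout changes.
    # A regex keeps the sketch short; an HTML parser is more robust in practice.
    match = re.search(r'class="price">\s*\$([\d.,]+)', html)
    return float(match.group(1).replace(",", "")) if match else None
```

If the site renames the `price` class, `price_from_html` simply returns `None`, which is exactly the fragility described above.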

In most cases, using APIs is the better way to get data because the results are organized and dependable. But if APIs are unavailable or insufficient, doing your own web scraping can be an alternative. Keep in mind that web scraping is more complicated and prone to more problems. Weigh these factors to decide the best way to get the data you need.

Bottom Line

To sum up, scraping APIs help gather information from many places on the Internet. They allow businesses, developers, and researchers to collect data automatically for analysis, automation, and integration with other systems. It's essential to understand how APIs work, such as sending requests and handling responses, and to consider legal and ethical issues. By respecting rate limits and obeying laws and terms of service, users can make the most of API scraping while avoiding problems. As technology and regulations change, staying informed and adaptable is essential for handling the challenges of scraping APIs.

With the help of experts from Scraping Intelligence, scraping APIs can be valuable for businesses: they make it easy to gather and analyze data, helping decision-makers make better choices and stay ahead of the competition. This article aims to help you understand what a scraping API is and how to use it to benefit your business.
