Amazon, the world’s largest online store, has revolutionized how people buy and sell online. In the financial year ending December 31, 2023, the company recorded net sales of nearly $575 billion. Most of this comes from its own vast range of products, but Amazon also earns revenue from third-party merchants' products and from services such as Amazon Prime subscriptions and cloud storage and computing (AWS). Nearly 60% of all Amazon sales are made by third-party sellers through Amazon Marketplace. These sellers rely on Fulfillment by Amazon (FBA), under which Amazon handles packing, shipping, and order fulfillment. Orders fulfilled through FBA are among the most common on Amazon, reportedly accounting for about 50% of all transactions.

As a result, in 2024 it is very important for businesses and individuals who want to sell online to collect data from Amazon. With specialized tools and APIs, users can gather a wealth of relevant data about Amazon products. Scraping Intelligence's advanced data scraping services help businesses understand what is trending and create achievable strategies to stay ahead of competitors.

What is Amazon Review Scraping?

Amazon review and rating scraping is the process of extracting customer reviews and ratings from Amazon product pages. This data is valuable because it captures customer feedback, shows how a product is performing, and reveals what customers like or dislike about specific products. Business owners, marketers, researchers, and analysts frequently use web scraping to gather Amazon review and rating data.

Key Statistics

- According to Amazon Stats, Amazon visitors finish 28% of all purchases within 3 minutes and 50% within 15 minutes.

- In 2023, sellers based in the United States averaged more than $250,000 in annual sales.

- Amazon's net sales revenue reached nearly 575 billion U.S. dollars in 2023.

- With its worldwide reach and popularity, Amazon is regarded as one of the world's most valuable brands.

- According to the Statista report, most of Amazon’s revenue comes from its e-retail sales of various product categories, followed by third-party revenues from various sellers' products, retail and media subscriptions, and AWS cloud services.

- Amazon is also a marketplace for third-party (3P) sellers, who use it as an e-commerce platform to present their products; 3P sales represent around 60% of total paid units.

- According to Statista reports, sales of services and Amazon's e-commerce business drove the company's net revenue growth from 2004 to 2023.

- The multinational e-commerce company's total net revenue in the last reported year was about 576 billion USD, compared to 514 billion USD in 2022.

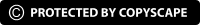

Why Scrape Amazon Product Reviews?

Amazon reviews and ratings can transform how businesses operate, helping them create achievable strategies and stay competitive in an evolving market. Here’s why scraping Amazon product review data is worthwhile:

Market Analysis and Product Information

Scraping reviews shows businesses what customers say about a product, including what they like and dislike. By analyzing large volumes of feedback, enterprises can identify trends in customer requirements, new features customers want, and areas where products or services fall short. Scraping reviews of competitor products also helps businesses compare offerings, identify weak areas, and find ways to outperform the competition.

Sentiment Analysis

Sentiment analysis of reviews is essential for gauging customers' overall satisfaction with a business. Businesses can also track how customer attitudes shift after a new product launch, an update, a promotion, or a change in marketing practices.
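The sketch below is a rough illustration of tracking review sentiment. It scores review text against a tiny hand-made word list. The POSITIVE and NEGATIVE word sets and the sentiment_score() and average_sentiment() helpers are hypothetical. A real analysis would use an established sentiment lexicon or a trained model rather than this toy list.

```python
import re

# Hypothetical toy lexicon; a real project would use a proper sentiment lexicon or model.
POSITIVE = {"good", "great", "love", "excellent", "warm", "comfortable"}
NEGATIVE = {"bad", "poor", "cheap", "broke", "tore", "disappointed"}

def sentiment_score(text):
    """Return (positive - negative) / matched words, in [-1, 1]; 0.0 if nothing matches."""
    words = re.findall(r"[a-z']+", text.lower())
    pos = sum(w in POSITIVE for w in words)
    neg = sum(w in NEGATIVE for w in words)
    if pos + neg == 0:
        return 0.0
    return (pos - neg) / (pos + neg)

def average_sentiment(reviews):
    """Mean sentiment score over a list of review strings (0.0 for an empty list)."""
    if not reviews:
        return 0.0
    return sum(sentiment_score(r) for r in reviews) / len(reviews)

reviews = [
    "Great gloves, very warm and comfortable.",
    "Poor stitching, the seam tore after a week.",
]
for r in reviews:
    print(round(sentiment_score(r), 2), r)
print("average:", average_sentiment(reviews))
```

To see whether customer attitudes shifted, compute average_sentiment() separately for reviews posted before and after a launch or promotion.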

New Product Development & Enhancement

Scraped reviews can also reveal ideas for additional features or needed functional improvements. Businesses can use this information in product development by building in the features customers ask for. Recurring complaints about specific aspects of a product (for instance, durability or performance) point directly to quality-control problems.
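A simple way to spot recurring quality complaints is to count how many reviews mention each aspect. The sketch below assumes the review text is already in a list of strings, such as the rev_result list built later in this article. The ASPECTS keyword sets and the aspect_mentions() helper are hypothetical and should be tailored to your product category.

```python
import re
from collections import Counter

# Hypothetical aspect keywords; adjust them for your own product category.
ASPECTS = {
    "durability": {"durable", "durability", "broke", "tore", "lasted"},
    "performance": {"performance", "fast", "slow", "warm", "cold"},
    "fit": {"fit", "tight", "loose", "size"},
}

def aspect_mentions(reviews):
    """Count how many reviews mention each aspect at least once."""
    counts = Counter()
    for review in reviews:
        words = set(re.findall(r"[a-z]+", review.lower()))
        for aspect, keywords in ASPECTS.items():
            if words & keywords:  # any keyword for this aspect appears
                counts[aspect] += 1
    return counts

reviews = [
    "The seam tore after two weeks.",
    "Fits well but the size runs small.",
    "Broke on day one, and it is not warm.",
]
print(aspect_mentions(reviews).most_common())
```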

Personalized Marketing and Targeting

Using reviewer information such as location or preferences, businesses can identify their potential audience more clearly and market to it accordingly. Ad managers can also write better advertising messages, because the features customers mention repeatedly show what they value most.

Competitor Benchmarking

Scraping competitors' product pages shows what customers think of similar products and services, including aspects such as price range and specific features. Analyzing competitors' product reviews helps organizations understand how rival brands are perceived and where opportunities exist for their own brands.
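For benchmarking, scraped star ratings can be condensed into a few comparable numbers per product. This sketch assumes you have already collected each product's star ratings as integers. The product names, ratings, and the rating_summary() helper are hypothetical.

```python
from statistics import mean

def rating_summary(ratings):
    """Average star rating and share of 1-2 star ratings (ratings must be non-empty)."""
    low = sum(1 for r in ratings if r <= 2)
    return {"avg": round(mean(ratings), 2), "low_share": round(low / len(ratings), 2)}

# Hypothetical star ratings scraped for our product and one competitor.
products = {
    "our_glove": [5, 4, 4, 2, 5],
    "rival_glove": [3, 1, 4, 2, 5],
}
for name, ratings in products.items():
    print(name, rating_summary(ratings))
```

A higher low_share for a rival points to complaints worth reading in detail, since they may reveal weaknesses your product can address.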

Controlling Product Position and Growth

Scraping reviews is vital for tracking a product's position because it shows whether the product is thriving or struggling. Reviews and ratings, along with customer and market surveys, give organizations an idea of how long a product will last in the market and what future demand for it may be. Customer reviews can also highlight new, distinct market segments that companies can take advantage of.
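To follow a product's position over time, scraped reviews can be grouped by month. This sketch assumes each review has already been reduced to a (date, star rating) pair. Extracting the date from the review text is a separate parsing step that is not shown here. The sample data and the monthly_average() helper are hypothetical.

```python
from collections import defaultdict
from datetime import date

def monthly_average(reviews):
    """Group (review_date, stars) pairs by month and return {"YYYY-MM": average stars}."""
    buckets = defaultdict(list)
    for review_date, stars in reviews:
        buckets[review_date.strftime("%Y-%m")].append(stars)
    # sort by month so the trend reads chronologically
    return {month: sum(s) / len(s) for month, s in sorted(buckets.items())}

# Hypothetical (date, star rating) pairs parsed from scraped reviews.
reviews = [
    (date(2024, 1, 5), 5), (date(2024, 1, 20), 4),
    (date(2024, 2, 2), 3), (date(2024, 2, 15), 2),
]
print(monthly_average(reviews))
```

A steady decline in the monthly average, like the drop from January to February in this sample, is an early signal worth investigating.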

Steps to Scrape Amazon Review Data

Following a structured procedure makes Amazon data scraping much easier:

Step 1: Choose the right tool or API for Amazon scraping.

Step 2: Set up your scraping environment.

Step 3: Decide which Amazon data needs to be scraped.

Step 4: Configure the chosen scraper to extract the required data.

Step 5: Start your scraper and collect the data.

Step 6: Analyze and process the scraped data.

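bs4: Beautiful Soup (bs4) is a Python package that extracts data from HTML and XML files. The examples below also use requests to download pages and pandas to save the results. None of these modules is included with Python by default. To install them, enter the following command in the terminal:

pip install requests beautifulsoup4 pandas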

Before we start web scraping, a few preparatory steps are needed. First, import all the necessary modules. Then obtain your cookie information before sending a request to Amazon, and add it to the request headers. Without cookies, you may not be able to scrape Amazon data and will likely keep getting errors.
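One way to attach cookies is to add a Cookie header alongside the other request headers. In the sketch below, build_headers() is a hypothetical helper and the cookie string is a placeholder. Copy the real value from the Cookie request header shown in your browser's developer tools while you are viewing Amazon.

```python
def build_headers(cookie_string):
    """Return request headers with a browser cookie attached (hypothetical helper)."""
    return {
        "User-Agent": ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
                       "AppleWebKit/537.36 (KHTML, like Gecko) "
                       "Chrome/90.0.4430.212 Safari/537.36"),
        "Accept-Language": "en-US, en;q=0.5",
        # Paste the Cookie request header value copied from your browser here.
        "Cookie": cookie_string,
    }

# Placeholder only: replace with your own cookie string.
headers = build_headers("name1=value1; name2=value2")
print(sorted(headers))
```

Pass the result as requests.get(url, headers=headers, timeout=10). Alternatively, requests accepts a dictionary of cookies through its cookies parameter.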

The user-defined getdata() function takes a URL, sends a request to it, and returns the response text. It uses the requests.get() function to retrieve data from the server at that URL.

Syntax: requests.get(url, params=None, **kwargs)

Pass the returned HTML to BeautifulSoup so that bs4 can parse it.

Then filter the required data using the soup object's find_all() method.

Program:

# import modules
import requests
from bs4 import BeautifulSoup

# browser-like request headers (add your 'Cookie' header here as described above)
HEADERS = {
    'User-Agent': ('Mozilla/5.0 (Windows NT 10.0; Win64; x64) '
                   'AppleWebKit/537.36 (KHTML, like Gecko) '
                   'Chrome/90.0.4430.212 Safari/537.36'),
    'Accept-Language': 'en-US, en;q=0.5',
}

# user-defined function: request the URL and return the page's HTML as text
def getdata(url):
    r = requests.get(url, headers=HEADERS, timeout=10)
    return r.text

def html_code(url):
    # pass the url into the getdata function
    htmldata = getdata(url)
    # parse the raw HTML so bs4 can search it
    soup = BeautifulSoup(htmldata, 'html.parser')
    return soup

url = ("https://www.amazon.in/Columbia-Mens-wind-"
       "resistant-Glove/dp/B0772WVHPS/?_encoding=UTF8&pd_rd"
       "_w=d9RS9&pf_rd_p=3d2ae0df-d986-4d1d-8c95-aa25d2ade606&pf"
       "_rd_r=7MP3ZDYBBV88PYJ7KEMJ&pd_rd_r=550bec4d-5268-41d5-"
       "87cb-8af40554a01e&pd_rd_wg=oy8v8&ref_=pd_gw_cr_cartx&th=1")

soup = html_code(url)
# display the HTML code
print(soup)

Output:

Note: The output is only the page's raw HTML code.

Now that the fundamental setup is finished, let's examine how scraping for a particular purpose can be accomplished.

Scrape customer names

Customer names are in span tags with the class a-profile-name. To verify the relevant element, open the product page in your browser, right-click a reviewer's name, and choose Inspect, as shown in the image.

To use the find_all() method, supply the tag name and the attribute with its value.

Code:

def cus_data(soup):
    # find every reviewer-name <span> with find_all()
    # and collect its text as a string
    cus_list = []
    for item in soup.find_all("span", class_="a-profile-name"):
        cus_list.append(item.get_text())
    return cus_list

cus_res = cus_data(soup)
print(cus_res)

Output:

['Amaze', 'Robert', 'D. Kong', 'Alexey', 'Charl', 'RBostillo']

Scrape user reviews

To find the customer reviews, repeat the same steps. This time, search for a specific tag (div in this case) with a unique class name.

def cus_rev(soup):
    # find every review-body <div> with find_all(); a class string containing
    # spaces must match the element's full class attribute exactly
    rev_list = []
    for item in soup.find_all("div", class_="a-expander-content "
                              "reviewText review-text-content "
                              "a-expander-partial-collapse-content"):
        # one entry per review, with surrounding whitespace and newlines trimmed
        rev_list.append(item.get_text(separator=" ", strip=True))
    return rev_list

rev_data = cus_rev(soup)

# drop any empty entries
rev_result = [i for i in rev_data if i]
print(rev_result)

Scraping Review Images

To obtain photo links from product reviews, follow the same steps. As before, find_all() receives the tag name and attribute. findAll(), which you may see in older code, is an alias of the same method.

def rev_img(soup):
    # find every review-image thumbnail with find_all()
    # and collect its image URL from the src attribute
    images = []
    for img in soup.find_all('img', class_="cr-lightbox-image-thumbnail"):
        images.append(img.get('src'))
    return images

img_result = rev_img(soup)
print(img_result)

Saving Data to a CSV File

Here we save the data as a CSV file. The data is first converted into a pandas DataFrame, which is then exported with the DataFrame's to_csv() method.

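import pandas as pd

# wrap each list in a Series so lists of unequal length are padded with NaN
# instead of raising "All arrays must be of the same length"
data = {'Name': pd.Series(cus_res),
        'review': pd.Series(rev_result)}

# create the DataFrame
df = pd.DataFrame(data)

# save the output without the numeric index column
df.to_csv('amazon_review.csv', index=False)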

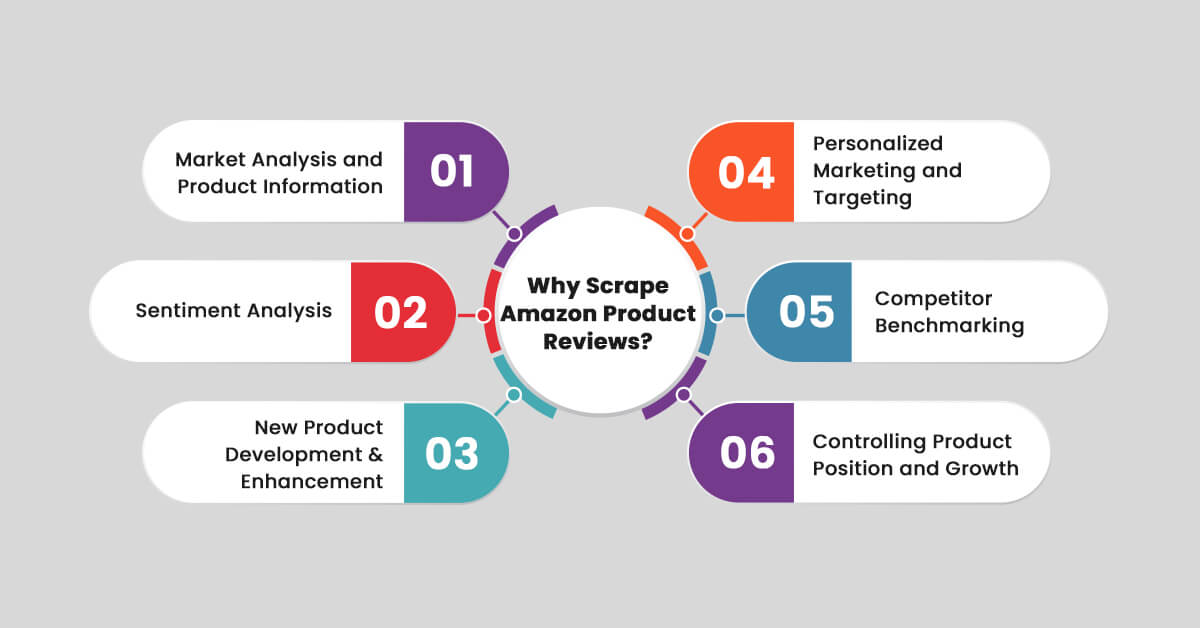

How to Avoid Getting Blocked by Amazon?

To avoid being blocked while scraping reviews and ratings, follow Amazon's standards carefully and use ethical tactics. Here are a few tips to help you avoid blocks:

Respect Amazon's Robots.txt

Check robots.txt: Always check Amazon's robots.txt file first to see which pages are allowed or disallowed for crawling. Repeatedly accessing disallowed pages violates Amazon's terms of service and can get your IP address or account blocked very quickly.
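Python's standard library can check URLs against robots.txt rules with urllib.robotparser. The rules below are illustrative only and are not Amazon's actual file. To use the live rules, call set_url('https://www.amazon.in/robots.txt') and read() on the parser instead of parse(). The is_allowed() wrapper is a hypothetical helper.

```python
from urllib.robotparser import RobotFileParser

def is_allowed(robots_lines, url, user_agent="*"):
    """Check a URL against robots.txt rules supplied as a list of lines."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    parser.modified()  # mark the rules as loaded so can_fetch() evaluates them
    return parser.can_fetch(user_agent, url)

# Illustrative rules only; they are not Amazon's real robots.txt.
rules = [
    "User-agent: *",
    "Disallow: /gp/cart",
    "Allow: /",
]
print(is_allowed(rules, "https://www.amazon.in/dp/B0772WVHPS"))
print(is_allowed(rules, "https://www.amazon.in/gp/cart/view.html"))
```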

Use Proxies

Amazon monitors the number of requests you make. If all of them come from the same IP address, Amazon will notice and may block that address. It is therefore recommended to send requests through proxy servers and switch proxies periodically. Each switch changes your IP address, so your requests appear to come from different places.

Residential Proxies: These are generally more effective than data center proxies because they use IP addresses belonging to real homes, which Amazon cannot easily detect.
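Proxy rotation can be as simple as cycling through a list of proxy URLs and passing a different one to each request through the proxies argument of requests.get(). The proxy addresses and the proxy_rotator() helper below are hypothetical placeholders for whatever your provider supplies.

```python
from itertools import cycle

# Hypothetical placeholder proxies; substitute the endpoints your provider gives you.
PROXIES = [
    "http://user:pass@proxy1.example.com:8000",
    "http://user:pass@proxy2.example.com:8000",
    "http://user:pass@proxy3.example.com:8000",
]

def proxy_rotator(proxies):
    """Return a function that gives the next proxy as a requests-style dict."""
    pool = cycle(proxies)

    def next_proxy():
        proxy = next(pool)
        # requests expects a mapping from URL scheme to proxy URL
        return {"http": proxy, "https": proxy}

    return next_proxy

next_proxy = proxy_rotator(PROXIES)
for _ in range(4):
    print(next_proxy()["https"])  # proxy1, proxy2, proxy3, then proxy1 again
```

Each call can then be used as requests.get(url, headers=HEADERS, proxies=next_proxy(), timeout=10).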

Rotate User Agents

A "user agent" tells Amazon which browser you are using, such as Chrome or Firefox. Change it often so your requests look like they come from different people using different browsers.
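A minimal way to rotate user agents is to pick one at random for each request. The strings below follow real browser formats but are examples and may be out of date. The random_headers() helper is hypothetical; its rng parameter exists only so the choice can be seeded for testing.

```python
import random

# Example user-agent strings; keep this pool filled with current, real browser values.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/90.0.4430.212 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/14.1 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:89.0) Gecko/20100101 Firefox/89.0",
]

def random_headers(rng=random):
    """Build request headers with a user agent chosen at random from USER_AGENTS."""
    return {
        "User-Agent": rng.choice(USER_AGENTS),
        "Accept-Language": "en-US, en;q=0.5",
    }

print(random_headers()["User-Agent"])
```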

Respect Amazon's Rules

The robots.txt file in Amazon's root directory tells you which parts of the site you may scrape. Obey these rules so you do not get banned. Requesting many pages at once can slow Amazon's servers, which is harmful and may get you blocked. Do not send too many requests; instead, spread them out over time.
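To spread requests out, you can pause for a random interval between them. The polite_fetch_all() helper below is a hypothetical sketch. It accepts any fetch function, such as the getdata() function defined earlier. The 2 to 5 second delay range is an arbitrary assumption, not a limit published by Amazon, and the sleep parameter exists only so the function can be tested without waiting.

```python
import random
import time

def polite_fetch_all(urls, fetch, min_delay=2.0, max_delay=5.0, sleep=time.sleep):
    """Call fetch(url) for each URL, pausing a random interval between requests."""
    results = []
    for i, url in enumerate(urls):
        if i > 0:
            # spread requests out instead of sending them all at once
            sleep(random.uniform(min_delay, max_delay))
        results.append(fetch(url))
    return results
```

With the earlier program, usage would look like pages = polite_fetch_all(list_of_urls, getdata).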

Solve Captchas

If Amazon suspects you are a bot, it may show you a CAPTCHA, a test with distorted images or text that bots find hard to read. Either use a service that can solve CAPTCHAs for you automatically, or pause scraping whenever one appears.
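If you choose to pause when a CAPTCHA appears, a retry loop with growing delays is one way to do it. The marker strings used to recognize a CAPTCHA page are assumptions; check them against the pages you actually receive. fetch_with_backoff() is a hypothetical helper that accepts any fetch function, such as getdata().

```python
import time

# Assumption: text that may appear on Amazon's CAPTCHA page; verify on real responses.
CAPTCHA_MARKERS = ("validateCaptcha", "Enter the characters you see below")

def looks_like_captcha(html):
    """Return True if the page contains any of the assumed CAPTCHA markers."""
    return any(marker in html for marker in CAPTCHA_MARKERS)

def fetch_with_backoff(url, fetch, max_tries=4, base_delay=30.0, sleep=time.sleep):
    """Retry fetch(url) with exponentially growing pauses while a CAPTCHA is returned."""
    for attempt in range(max_tries):
        html = fetch(url)
        if not looks_like_captcha(html):
            return html
        if attempt < max_tries - 1:
            # pause 30 s, 60 s, 120 s, ... before the next attempt
            sleep(base_delay * 2 ** attempt)
    return None  # still blocked: stop and reduce your request rate
```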

Use Headless Browsers

You can scrape data with Selenium or Puppeteer while the webpage is rendered in the background, without a visible browser window. However, some sites, including Amazon, may detect headless browsers. You can adjust browser settings to reduce the likelihood of detection, but do so carefully.

Make Use Of APIs Instead Of Scraping

Where possible, use an official API, such as Amazon's Product Advertising API, to get product details and reviews instead of scraping pages. This is an official way to get data without the risk of being blocked, although both the data you can request and how often you can request it are limited.

Conclusion

Outsourcing to Scraping Intelligence helps businesses fine-tune their products and strategies with the right data. We help clients overcome common scraping issues, such as IP blocking and CAPTCHAs, so that data collection can continue. Amazon data is valuable to businesses because it captures customer experiences, ratings, and trends. It helps firms make better decisions about which products to develop and bring to market, and it supports sentiment analysis.

Incredible Solutions After Consultation

- Industry-Specific Expert Opinion

- Assistance in Data-Driven Decision Making

- Insights Through Data Analysis