What is Web Scraping?

You can build web scraping programs that monitor retailers for better product analysis by writing just a few lines of Python. Most of us have browsed product pages on Amazon, one of the best-selling e-commerce platforms. This blog shows you how to develop a simple, practical Python script for scraping Amazon bestseller data. We'll start by scraping the product details, such as the title and price, from each product page you want to track.

After that, it's important to track product prices over time by storing the data in a secure database, which lets you identify historical price trends. Next, schedule the script to run at specific times throughout the day. You can also set up an email alert for whenever a price drops below your set limit.

Starting the Process

BeautifulSoup will be your go-to tool if you need to scrape prices from several product listings. It lets you quickly access specific tags in a page's HTML.

TRACKER_PRODUCTS.csv is a spreadsheet that contains the links to the products you want to track. After the script runs, the scraped data is saved in a search history file.

Now download the TRACKER_PRODUCTS.csv file from the repository and save it in the trackers folder. Then run the script with Python, which fetches the HTML of each listed product page so it can be parsed.
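For reference, here is a minimal sketch of the fetch step using only Python's standard library (`urllib.request`). The helper names `build_request` and `fetch_html`, the header values, and the timeout are assumptions for illustration, not taken from the original script. Amazon may block or throttle automated requests, so check its terms of use before running anything like this.

```python
import urllib.request

# Hypothetical browser-like headers (an assumption); sites such as Amazon often
# reject requests that use Python's default User-Agent.
HEADERS = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    "Accept-Language": "en-US,en;q=0.9",
}


def build_request(url: str) -> urllib.request.Request:
    """Create a GET request for a product page with the assumed headers."""
    return urllib.request.Request(url, headers=HEADERS)


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download a product page and return its HTML as text (hypothetical helper)."""
    with urllib.request.urlopen(build_request(url), timeout=timeout) as response:
        # Use the charset the server declares, falling back to UTF-8.
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")
```

Keeping request construction separate from the network call makes the headers easy to check without touching the site.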

Which Selectors Should You Use?

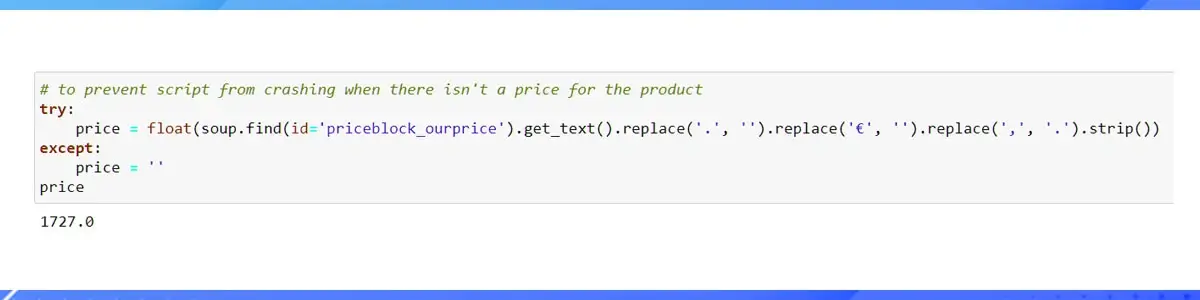

When you see soup.find, it simply means you're looking for an element by its HTML tag, such as span or div. Elements can also be matched by attributes such as name, class, and id. To search with CSS selectors instead, use soup.select (or soup.select_one for the first match). You can also use your browser's Inspect tool to examine the page's HTML and find the element you need. SelectorGadget, a Chrome extension, is useful for this, since it helps you identify the correct CSS selector for an element.
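To make the difference concrete, the sketch below runs both styles against a tiny hand-written HTML snippet. The ids and classes (`productTitle`, `a-price`, `a-offscreen`) are assumptions modelled on Amazon markup seen in the past; Amazon changes its pages often, so confirm the real selectors with Inspect or SelectorGadget.

```python
from bs4 import BeautifulSoup

# A tiny stand-in for a product page (hypothetical markup, not a real Amazon page).
SAMPLE_HTML = """
<div id="centerCol">
  <span id="productTitle"> Example Wireless Mouse </span>
  <span class="a-price"><span class="a-offscreen">$24.99</span></span>
</div>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# soup.find: match by tag name plus an attribute filter (here, the id).
title_tag = soup.find("span", id="productTitle")
title = title_tag.get_text(strip=True)

# soup.select_one: match with a CSS selector (descendant + class selectors).
price_tag = soup.select_one("span.a-price span.a-offscreen")
price_text = price_tag.get_text(strip=True)

print(title)       # Example Wireless Mouse
print(price_text)  # $24.99
```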

Testing BeautifulSoup for Scraping Amazon Products

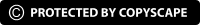

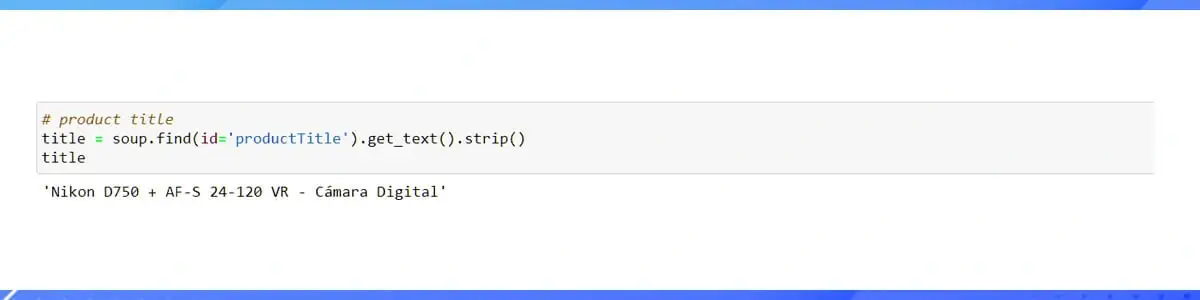

Getting the correct product title can be as simple as the code shown. Extracting the price is harder, but it can be done by adding two or three extra lines at the end. The final version of the script modifies this code so that prices are handled in USD.
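The price arrives as display text such as `$1,299.99`, so it has to be converted to a number before it can be compared or stored. The helper below is a hypothetical sketch of that step, not the original script's code; it assumes US-style formatting (comma as thousands separator, dot as decimal point).

```python
import re
from typing import Optional


def parse_price(price_text: str) -> Optional[float]:
    """Convert displayed price text such as '$1,299.99' to a float.

    Assumes US-style formatting. Returns None when no number is present
    (for example, 'Currently unavailable.').
    """
    match = re.search(r"\d[\d,]*(?:\.\d+)?", price_text)
    if match is None:
        return None
    # Drop thousands separators before converting.
    return float(match.group().replace(",", ""))
```

For example, `parse_price("$1,299.99")` returns `1299.99`. If the page shows a price range, only the first number is taken.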

Testing a web scraper is largely a matter of trial and error, and even experienced developers go through several rounds of writing and testing before a scraper works. The goal is to find an HTML pattern that reliably selects the correct part of the page. Dedicated web scraping tools can help you locate the target data in the HTML more quickly.
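One common way to make a scraper more robust while you test is to try several candidate selectors in order and keep the first one that matches. This is a general technique, not necessarily what the original script does, and the selector list below is hypothetical: Amazon has used different price containers over time, so verify each one in your browser.

```python
from typing import Iterable, Optional

from bs4 import BeautifulSoup

# Hypothetical candidate selectors, most specific first.
PRICE_SELECTORS = [
    "#priceblock_ourprice",
    "#priceblock_dealprice",
    "span.a-price span.a-offscreen",
]


def first_match_text(soup: BeautifulSoup, selectors: Iterable[str]) -> Optional[str]:
    """Return the stripped text of the first selector that matches, else None."""
    for selector in selectors:
        tag = soup.select_one(selector)
        if tag is not None:
            text = tag.get_text(strip=True)
            if text:  # skip matches that contain no visible text
                return text
    return None
```

When a page layout changes, you only need to add a new selector to the list rather than rewrite the parsing code.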

Writing the Full Script

As the images above show, accessing the individual elements is simple. Once you've finished testing, you'll need to write a script that can:

- Read the product URLs from the CSV file.
- Loop over them, scrape each product's information, and save the data in a suitable format.
- Keep all previous results in the Excel spreadsheet as well.

To write the script, you'll need a code editor. Any editor will do, but Spyder 4 works well. Create a new file called Amazon Scraper.py and save it.
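The core loop of such a script might look like the sketch below. It is an illustration under stated assumptions, not the original Amazon Scraper.py: it assumes the tracker CSV has a `url` header column, and it takes a hypothetical `scrape` function (URL in, `(title, price)` out) as a parameter so the loop can be tested without hitting the network.

```python
import csv
from datetime import datetime
from typing import Callable, Dict, List, Tuple

# Hypothetical scraper signature: takes a product URL, returns (title, price).
Scraper = Callable[[str], Tuple[str, float]]


def scrape_all(tracker_path: str, scrape: Scraper) -> List[Dict[str, object]]:
    """Read product URLs from the tracker CSV and scrape each one in a loop."""
    records: List[Dict[str, object]] = []
    with open(tracker_path, newline="", encoding="utf-8") as f:
        for row in csv.DictReader(f):
            url = row["url"]  # assumed column name
            try:
                title, price = scrape(url)
            except Exception as exc:  # keep going if one page fails
                print(f"Skipping {url}: {exc}")
                continue
            records.append({
                "date": datetime.now().strftime("%Y-%m-%d %H:%M"),
                "url": url,
                "title": title,
                "price": price,
            })
    return records
```

Returning a list of records keeps the loop independent of the storage format, so the same results can be written to a CSV or an Excel file.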

Tracker Products

There are a few things to keep in mind when working with the TRACKER_PRODUCTS.csv file. It is easy to use and consists mainly of three columns: URL, buy below, and code. To track prices, enter the URLs of the items you want to monitor here.
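As a sketch of how those three columns could be loaded into typed values, the helper below assumes the header row is `url,buy_below,code` (the exact header names in the original file may differ). `load_tracker` and `TrackedProduct` are hypothetical names.

```python
import csv
from typing import List, TypedDict


class TrackedProduct(TypedDict):
    url: str
    buy_below: float  # price limit for the alert
    code: str


def load_tracker(path: str) -> List[TrackedProduct]:
    """Load TRACKER_PRODUCTS.csv, assuming header columns url, buy_below, code."""
    products: List[TrackedProduct] = []
    with open(path, newline="", encoding="utf-8") as f:
        for row in csv.DictReader(f):
            url = (row.get("url") or "").strip()
            if not url:  # ignore rows without a URL
                continue
            products.append({
                "url": url,
                "buy_below": float(row["buy_below"]),
                "code": row["code"].strip(),
            })
    return products
```

Converting `buy_below` to a float when the file is loaded means later price comparisons work on numbers rather than strings.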

Search History

Treat the SEARCH HISTORY file similarly. For the first run, add a blank file to the search history folder. Line 116 of the script specifies the search history folder; as with the Amazon Scraper path in the previous example, you can simply replace that text with your own folder path.
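Here is a hedged sketch of how the history file can keep earlier results: each run appends new rows, and the header is written only when the file is missing or still blank, which covers the empty file created for the first run. It uses a CSV file as a stand-in for the Excel spreadsheet, and the column list is an assumption.

```python
import csv
import os
from typing import Dict, List

FIELDS = ["date", "code", "url", "title", "price"]  # assumed column order


def append_history(history_path: str, records: List[Dict[str, object]]) -> None:
    """Append scraped rows to the search history CSV, keeping earlier results.

    The header is written only when the file is missing or empty.
    """
    needs_header = (not os.path.exists(history_path)
                    or os.path.getsize(history_path) == 0)
    with open(history_path, "a", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if needs_header:
            writer.writeheader()
        writer.writerows(records)
```

Opening the file in append mode means a failed run never overwrites the historical data you need for trend analysis.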

Price Notification

Line 97 of the script contains a section where you can set up an email alert for when the price falls below your defined limit. The alert is sent every time the price reaches that target level.
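The alert logic can be sketched with Python's standard `email` and `smtplib` modules. This is an illustration, not the code on line 97: the function names are hypothetical, and the SMTP host, port, credentials, and addresses are placeholders you would replace with your own provider's settings.

```python
import smtplib
from email.message import EmailMessage


def should_alert(price: float, buy_below: float) -> bool:
    """True when the scraped price has fallen below the user's limit."""
    return price < buy_below


def build_alert(title: str, url: str, price: float, buy_below: float,
                sender: str, recipient: str) -> EmailMessage:
    """Compose a plain-text price-drop email."""
    msg = EmailMessage()
    msg["Subject"] = f"Price alert: {title} is now ${price:.2f}"
    msg["From"] = sender
    msg["To"] = recipient
    msg.set_content(
        f"{title} dropped below your limit of ${buy_below:.2f}.\n"
        f"Current price: ${price:.2f}\n{url}\n"
    )
    return msg


def send_alert(msg: EmailMessage, host: str, port: int,
               user: str, password: str) -> None:
    """Send via SMTP with STARTTLS (host, port and credentials are placeholders)."""
    with smtplib.SMTP(host, port) as server:
        server.starttls()
        server.login(user, password)
        server.send_message(msg)
```

Splitting the check, the message, and the sending into separate functions lets you test the first two without a mail server.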

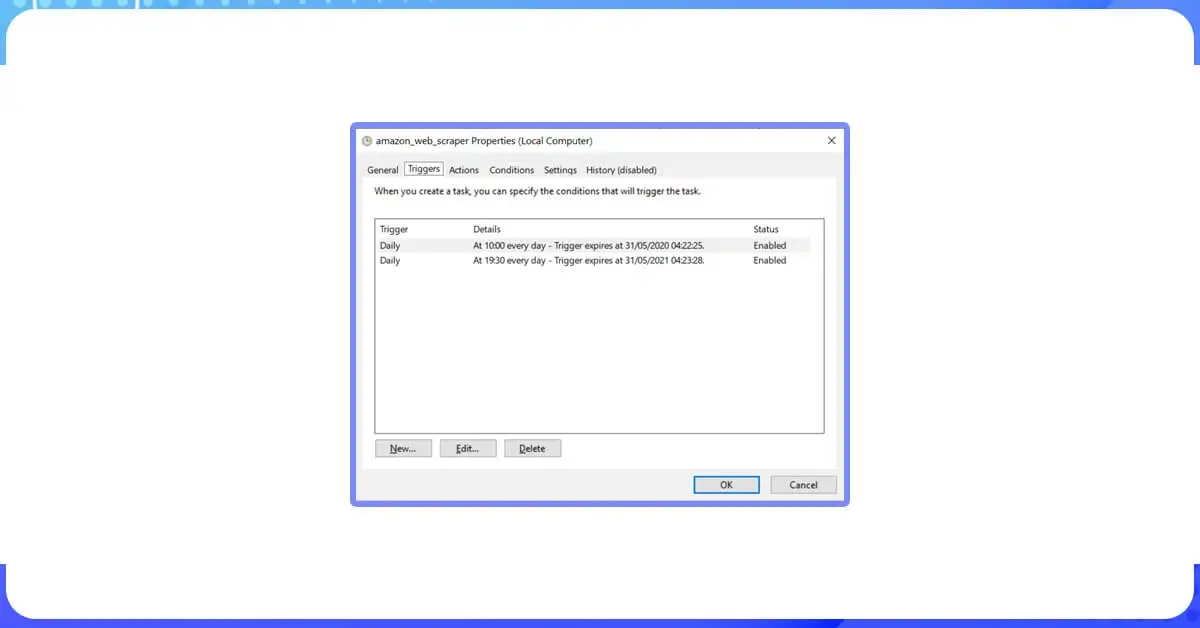

To run the script automatically, you need to create a scheduled task. You can set one up by following the steps below:

First, open Task Scheduler by pressing the Windows key and typing 'Task Scheduler'. Then select 'Create Task' and open the 'Triggers' tab to set when the script should run.

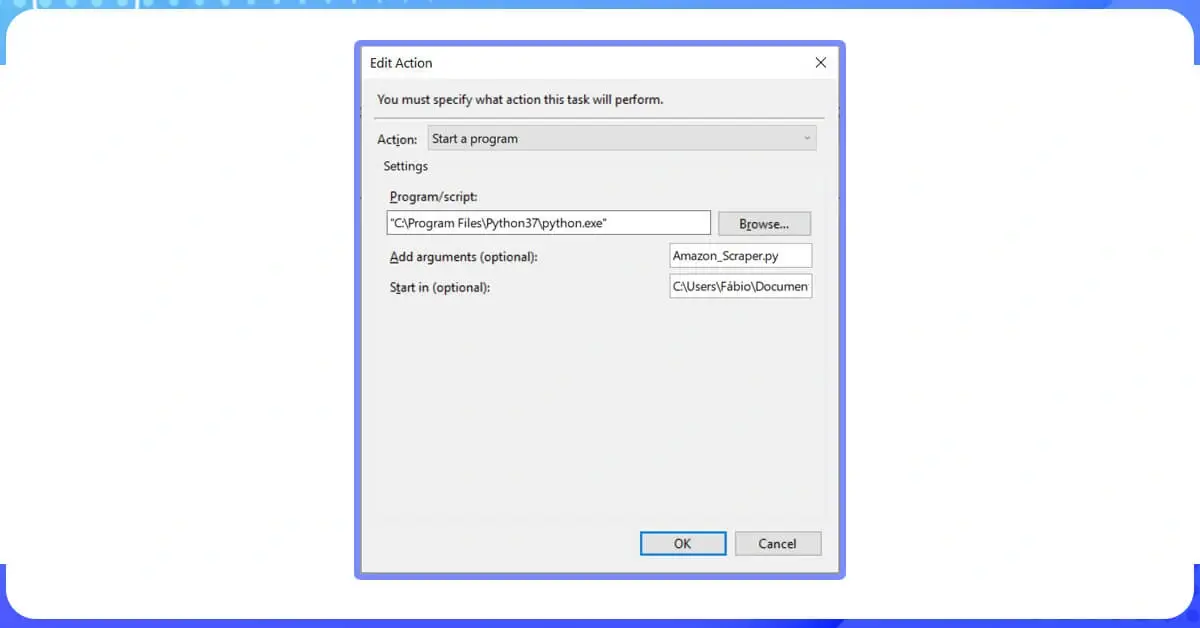

Next, go to the 'Actions' tab and add a new action. In the 'Program/script' box, enter the path to your Python executable. Ours is in the Program Files folder (see the image above).

Then type the name of your script file in the 'Add arguments' box.

This tells the system to run the command on the Amazon Scraper.py file. The task is now ready to run. Task Scheduler offers many additional options you can explore to test the script and adapt it to your business needs. This is the most basic way of scheduling your code; the setup can also be automated with a Windows batch file.
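As an alternative to clicking through the GUI, a similar daily task can be created from the command line with Windows' built-in `schtasks` tool. The sketch below only builds the argument list in Python; the task name, start time, and script path are placeholders, and `sys.executable` is simply the interpreter running the code. Quoting rules for paths with spaces can be fiddly, so check the created task's action in Task Scheduler afterwards.

```python
import sys
from typing import List


def build_schtasks_command(task_name: str, script_path: str,
                           start_time: str) -> List[str]:
    """Build a schtasks command that runs the script once a day.

    task_name and start_time (24-hour HH:MM) are placeholders you choose.
    """
    # Quote both paths because either may contain spaces.
    action = f'"{sys.executable}" "{script_path}"'
    return [
        "schtasks", "/Create",
        "/SC", "DAILY",      # schedule type
        "/TN", task_name,    # task name shown in Task Scheduler
        "/TR", action,       # command the task runs
        "/ST", start_time,   # daily start time
    ]
```

On Windows, you would pass the result to `subprocess.run(cmd, check=True)` to create the task.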

Contact Scraping Intelligence for Scraping Amazon Bestsellers with Python!